Automating Kubernetes Host-Management with Rancher System-Upgrade-Controller

Managing Kubernetes clusters at scale can be challenging, especially when it comes to keeping the underlying hosts up-to-date. The Rancher System-Upgrade-Controller simplifies this process by automating host upgrades in a Kubernetes-native way. This tool leverages Kubernetes resources to orchestrate upgrades, ensuring minimal downtime and consistent configurations across your cluster.

In this article, we will explore how the System-Upgrade-Controller works, its key features, and how to set it up to streamline your Kubernetes host-management tasks. Whether you’re managing a small cluster or a large-scale environment, this guide will help you automate upgrades efficiently and securely.

What is the System-Upgrade-Controller?

Rancher’s System-Upgrade-Controller (SUC) is a Kubernetes-native controller that lets you manage your Kubernetes hosts through Kubernetes CRDs. Whether you are upgrading OS dependencies or the Kubernetes version itself, the System-Upgrade-Controller provides a wide range of options to simplify these tasks.

How Does the System-Upgrade-Controller Work?

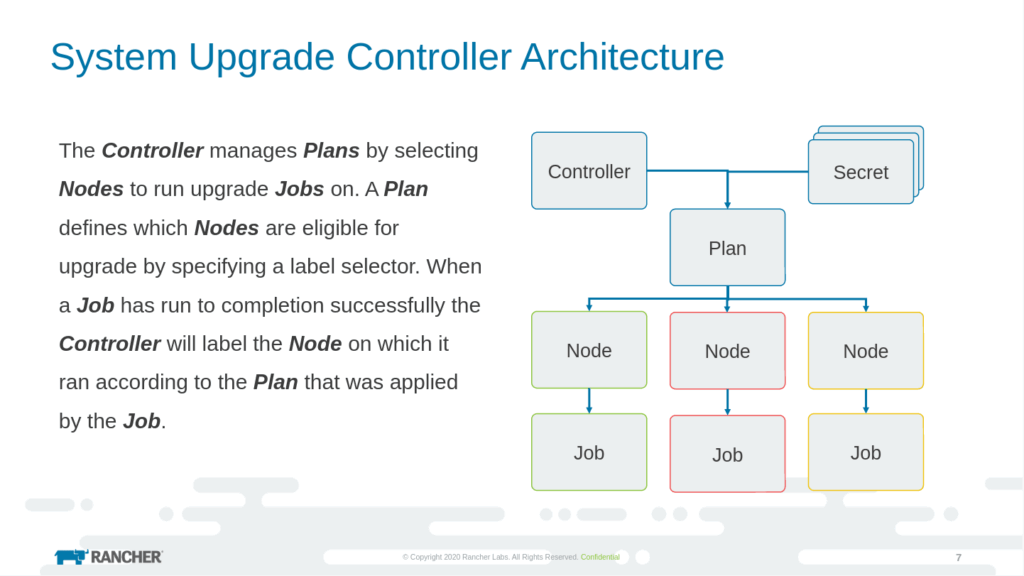

The System-Upgrade-Controller monitors the plans.upgrade.cattle.io CRD. These plans specify the actions to be performed and the target nodes. Once a plan is applied to eligible nodes, the controller labels the nodes with a hash of the plan’s configuration. This mechanism ensures that each plan is executed only once per node for a given configuration, preventing redundant upgrades.

The System-Upgrade-Controller operates in a straightforward workflow:

- Plan Creation: Define a plan using the Plan CRD, specifying the upgrade job by declaring, for example, the image, version, and node selection criteria.

- Plan Execution: The controller applies the plan to eligible nodes while ensuring a controlled rollout.

- Node Labelling: Once a node is upgraded successfully, it is labelled with the plan’s hashed configuration to prevent redundant executions.
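The label check in the last step can be pictured with a small shell sketch. It is not the controller's actual implementation: the helper names plan_hash and needs_upgrade are hypothetical, SHA-256 over image and version is an assumed stand-in for whatever hash the controller computes internally, and v1.31.5+rke2r1 is only an example of an older version.

```shell
#!/bin/sh
# Illustrative only: mimics "run once per node per plan configuration".
# plan_hash and needs_upgrade are hypothetical helpers, not part of SUC.

# Hash the fields that define a plan's configuration
# (assumption for this sketch: image and version only).
plan_hash() {
  printf '%s:%s' "$1" "$2" | sha256sum | cut -d' ' -f1
}

# Succeeds (exit 0) when the hash stored in the node label
# differs from the hash of the current plan configuration.
needs_upgrade() {
  node_label="$1"
  current_hash="$2"
  [ "$node_label" != "$current_hash" ]
}

old=$(plan_hash rancher/rke2-upgrade v1.31.5+rke2r1)
new=$(plan_hash rancher/rke2-upgrade v1.32.2+rke2r1)

# A node labelled with the old hash is picked up again once the plan changes.
if needs_upgrade "$old" "$new"; then echo "node needs upgrade"; fi
# A node already labelled with the current hash is skipped.
if ! needs_upgrade "$new" "$new"; then echo "node is up to date"; fi
```

The point of the design is that the label stores a hash of the configuration rather than a simple "done" flag, so any change to the plan invalidates the label on every node at once.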

Why Do I Need It?

While you could manage upgrades using things like Kubernetes Jobs or Ansible, the System-Upgrade-Controller offers a Kubernetes-native approach while keeping things simple. By using CRDs, you can integrate upgrades declaratively through GitOps workflows. Additionally, when new nodes are added to the cluster, they automatically receive the appropriate upgrade plans, ensuring consistency across your infrastructure.

Getting Started with SUC

Installing SUC

Installing the System-Upgrade-Controller is straightforward. Run the following commands to deploy the controller and its CRDs:

kubectl apply -f https://github.com/rancher/system-upgrade-controller/releases/latest/download/system-upgrade-controller.yaml
kubectl apply -f https://github.com/rancher/system-upgrade-controller/releases/latest/download/crd.yaml

Now we can start with the planning.

Note: The plans must be created in the same namespace where the controller was deployed.
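Before creating plans, it can help to confirm that the CRD is registered and the controller is running. The following is a minimal sketch, assuming the manifest deploys a Deployment named system-upgrade-controller into the system-upgrade namespace (the namespace used by the example plans in this article); check_suc_install is a hypothetical helper name.

```shell
#!/bin/sh
# Assumptions: namespace "system-upgrade" and Deployment name
# "system-upgrade-controller"; adjust both if your manifest differs.
SUC_NAMESPACE="${SUC_NAMESPACE:-system-upgrade}"

check_suc_install() {
  # The Plan CRD must be registered before any plan can be created.
  kubectl get crd plans.upgrade.cattle.io || return 1
  # Block until the controller Deployment reports a completed rollout.
  kubectl -n "$SUC_NAMESPACE" rollout status \
    deployment/system-upgrade-controller --timeout=120s
}
```

Source the snippet and call check_suc_install after applying the manifests; a non-zero exit status means either the CRD or the controller is not ready yet.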

Example Plans

Upgrading Kubernetes Versions

Here’s an example of how to upgrade an RKE2 cluster with SUC. It consists of two plans: one for the Kubernetes control-plane nodes and one for the worker nodes.

apiVersion: upgrade.cattle.io/v1
kind: Plan
metadata:
  name: server-plan
  namespace: system-upgrade
  labels:
    rke2-upgrade: server
spec:
  # specify the image and version of the container running the upgrade
  upgrade:
    image: rancher/rke2-upgrade
  version: v1.32.2+rke2r1
  # how many nodes will run the upgrade simultaneously
  concurrency: 1
  # enable/disable cordon on node
  cordon: true
  serviceAccountName: system-upgrade
  # which nodes the plan is targeted for
  nodeSelector:
    matchExpressions:
      - key: node-role.kubernetes.io/control-plane
        operator: In
        values:
          - "true"
  tolerations:
    - effect: NoExecute
      key: CriticalAddonsOnly
      operator: Equal
      value: "true"
    - effect: NoSchedule
      key: node-role.kubernetes.io/control-plane
      operator: Exists
    - effect: NoExecute
      key: node-role.kubernetes.io/etcd
      operator: Exists
---
apiVersion: upgrade.cattle.io/v1
kind: Plan
metadata:
  name: agent-plan
  namespace: system-upgrade
  labels:
    rke2-upgrade: agent
spec:
  # specify the image and version of the container running the upgrade
  upgrade:
    image: rancher/rke2-upgrade
  version: v1.32.2+rke2r1
  # init-container which will wait for the `server-plan` to be finished before this plan can be applied
  prepare:
    args:
      - prepare
      - server-plan
    image: rancher/rke2-upgrade
  # how many nodes will run the upgrade simultaneously
  concurrency: 1
  # enable/disable cordon on node
  cordon: true
  # pass drain options
  # drain:
  #   force: false
  #   ignoreDaemonSets: true
  #   # ...
  serviceAccountName: system-upgrade
  # which nodes the plan is targeted for
  nodeSelector:
    matchExpressions:
      - key: beta.kubernetes.io/os
        operator: In
        values:
          - linux
      - key: node-role.kubernetes.io/worker
        operator: In
        values:
          - "true"
      - key: node-role.kubernetes.io/control-plane
        operator: NotIn
        values:
          - "true"

Once the plans are applied to the cluster, the controller registers them. It collects all nodes that match each plan's selector and checks them for the corresponding label: plan.upgrade.cattle.io/server-plan for control-plane nodes and plan.upgrade.cattle.io/agent-plan for workers. For every node on which the plan has not been applied yet, the controller creates a Job that runs on that node, one node at a time because concurrency is set to 1. The Job's Pod runs the upgrade image and its scripts, which do the actual work. Afterwards, the node is uncordoned and labelled with the hash of the plan's configuration. Only then is the next node upgraded.

Both plans are picked up at the same time, so a Job may be created for the first worker node while the first control-plane node is still being upgraded. The agent-plan's prepare step, however, waits for the server-plan to finish before the first worker node is cordoned and upgraded.

When a plan changes, the controller detects the hash mismatch and the process starts again from the beginning.
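To follow this process while it runs, you can list the plans, the Jobs created for them, and the plan labels on each node. This is a sketch under the assumption that the plans live in the system-upgrade namespace, as in the manifests above; watch_rollout is a hypothetical helper name.

```shell
#!/bin/sh
# Assumption: plans live in the "system-upgrade" namespace.
SUC_NAMESPACE="${SUC_NAMESPACE:-system-upgrade}"

watch_rollout() {
  # Plans and the Jobs the controller created for them.
  kubectl -n "$SUC_NAMESPACE" get plans.upgrade.cattle.io,jobs
  # Nodes with one extra column per plan label; an empty column means
  # the plan has not completed on that node yet.
  kubectl get nodes \
    -L plan.upgrade.cattle.io/server-plan,plan.upgrade.cattle.io/agent-plan
}
```

Calling watch_rollout repeatedly during an upgrade shows the label columns filling in node by node.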

Running Bash Scripts

To show how custom shell scripts can be used in plans, here is another example that upgrades the OS packages on Ubuntu hosts.

apiVersion: v1
kind: Secret
metadata:
  name: upgrade-os
  namespace: system-upgrade
type: Opaque
stringData:
  # adding bash script
  # here could be anything
  # ex. adding reboot command if reboot-required
  upgrade.sh: |
    #!/bin/sh
    set -e
    apt update
    apt list --upgradable
    apt upgrade -y
    apt autoclean
---
apiVersion: upgrade.cattle.io/v1
kind: Plan
metadata:
  name: upgrade-os
  namespace: system-upgrade
spec:
  # how many nodes will run the upgrade simultaneously
  concurrency: 1
  # enable/disable cordon on node
  # if reboot on reboot-required included into script, set this to true
  cordon: false
  # specify the image and version of the container running the upgrade
  # passing arguments to run the script
  upgrade:
    image: alpine:latest # change image tag
    command: ["chroot", "/host"]
    args: ["sh", "/run/cattle-system/secrets/upgrade-os/upgrade.sh"]
  # setting version to current date
  version: "$(date +'%Y-%m-%d-%H%M')"
  # mount script using secret
  secrets:
    - name: upgrade-os
      path: /host/run/cattle-system/secrets/upgrade-os
  serviceAccountName: system-upgrade
  # which nodes the plan is targeted for
  nodeSelector:
    matchExpressions:
      - key: beta.kubernetes.io/os
        operator: In
        values:
          - linux
  tolerations:
    - effect: NoExecute
      key: CriticalAddonsOnly
      operator: Equal
      value: "true"
    - effect: NoSchedule
      key: node-role.kubernetes.io/control-plane
      operator: Exists
    - effect: NoExecute
      key: node-role.kubernetes.io/etcd
      operator: Exists

Apply this YAML through a heredoc, as follows, so that the date is inserted. Because the EOF delimiter is unquoted, the shell expands the $(date ...) expression before kubectl receives the manifest.

cat <<EOF | kubectl apply -f -
# yaml
EOF

Use Cases for SUC

Beyond upgrading Kubernetes versions, the System-Upgrade-Controller can be leveraged for various host-management tasks, including:

- OS Dependency Upgrades: Keep operating system packages up-to-date by executing upgrade scripts or package management commands.

- Custom Scripts Execution: Support Kubernetes upgrades using kubeadm or other custom workflows.

- Host Configuration Management: Apply security patches, update configuration files, and install required software packages across nodes.

Taking Automation to the Next Level

To push automation even further, consider integrating the System-Upgrade-Controller with an automated dependency management tool like Renovate. Renovate can monitor your dependencies, including Kubernetes versions and container images, and automatically create pull requests or updates when new versions are available. By combining Renovate with SUC, you can establish a fully automated pipeline that not only identifies updates but also applies them seamlessly across your infrastructure.

Benefits of Integration

- Proactive Updates: Stay ahead with the latest features, performance improvements, and security patches without manual intervention.

- Consistency Across Environments: Ensure all nodes in your cluster are running the same versions, reducing configuration drift.

- Reduced Operational Overhead: Automating both detection and application of updates minimizes the time spent on routine maintenance tasks.

Example Workflow

- Dependency Monitoring: Renovate scans your repositories for outdated dependencies, including Kubernetes manifests and container images.

- Update Proposal: Renovate creates pull requests with updated versions, which can be reviewed and merged.

- Automated Rollout: Once merged, SUC applies the updates to your cluster nodes based on predefined plans, ensuring a controlled and reliable rollout.
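In practice, the change proposed in such a pull request would amount to a one-line edit of the plan's version field. The sketch below imitates that edit locally to show what SUC reacts to. bump_plan_version is a hypothetical helper and not part of Renovate or SUC, and it assumes the plan file contains a single version: line.

```shell
#!/bin/sh
# Hypothetical helper: rewrite the value of the "version:" line in a plan file.
# Assumes the file contains exactly one "version:" key.
bump_plan_version() {
  plan_file="$1"
  new_version="$2"
  # Replace only the value and keep the original indentation.
  # A temporary file is used instead of "sed -i" for portability.
  sed "s|^\( *version:\).*|\1 ${new_version}|" "$plan_file" > "${plan_file}.tmp" \
    && mv "${plan_file}.tmp" "$plan_file"
}
```

For example, bump_plan_version server-plan.yaml v1.32.3+rke2r1 (file name and version are placeholders) changes the plan's configuration, so once the file is applied the controller sees a new hash and rolls the plan out again.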

By combining these tools, you can create a robust, hands-off system for managing your Kubernetes infrastructure, allowing your team to focus on higher-value tasks.

Conclusion

The Rancher System-Upgrade-Controller is a powerful tool that automates Kubernetes host management while ensuring consistency and efficiency. By leveraging Kubernetes-native CRDs, it simplifies the upgrade process and reduces the need for manual intervention. Whether managing a small cluster or a large-scale environment, SUC helps maintain an up-to-date and resilient infrastructure.

Need help with Kubernetes automation?

We support companies in designing, implementing, and operating Kubernetes clusters – including upgrade strategies and GitOps integration.

Get in touch with us to discuss how we can support your infrastructure goals.
