MLOps

Achieve the greatest possible level of automation.

From DevOps to MLOps

DevOps was widely adopted in the past decade for developing, testing, deploying, and operating large-scale conventional software systems. With DevOps, development cycles became shorter, deployment speed increased, and system releases became auditable and dependable.

Meanwhile, advances in machine learning (ML) and AI over the past few years have changed computing by allowing systems to learn from large datasets as they go. This mitigates many earlier programming pitfalls and opens up use cases that conventional programming cannot address. Unfortunately, DevOps principles cannot be applied directly to ML development, because in conventional software the final product depends solely on code.

ML development depends on both code and data. Moreover, ML model development is experimental in nature and involves more components that are significantly more complex to build and operate. It is worth noting that writing ML code takes up only approximately 30% of a data scientist's time. The rest goes to data analysis, feature engineering, experimenting with different hyperparameters, using remote hardware to run multiple experiments in parallel, experiment tracking, model serving, and more.
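To make the code-and-data dependence concrete, here is a minimal sketch of how an experiment run could be identified. The function name `run_fingerprint` and its inputs are hypothetical and chosen only for illustration; real experiment trackers record far more than this.

```python
import hashlib
import json


def run_fingerprint(code: str, data: bytes, hyperparams: dict) -> str:
    """Return a short ID derived from code, data, and hyperparameters.

    Hypothetical helper: a change to any of the three inputs yields a
    different ID, which is why versioning code alone is not enough.
    """
    parts = [
        hashlib.sha256(code.encode("utf-8")).hexdigest(),
        hashlib.sha256(data).hexdigest(),
        # sort_keys makes the ID independent of dict insertion order
        hashlib.sha256(
            json.dumps(hyperparams, sort_keys=True).encode("utf-8")
        ).hexdigest(),
    ]
    return hashlib.sha256("|".join(parts).encode("utf-8")).hexdigest()[:12]


# Same code and hyperparameters, different data: the run IDs differ.
run_a = run_fingerprint("train.py v1", b"dataset snapshot A", {"lr": 0.01})
run_b = run_fingerprint("train.py v1", b"dataset snapshot B", {"lr": 0.01})
print(run_a, run_b)
```

In a conventional DevOps setup only the first of the three inputs would be versioned; here the data and the hyperparameters change the identity of the result just as much as the code does.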

What is MLOps?

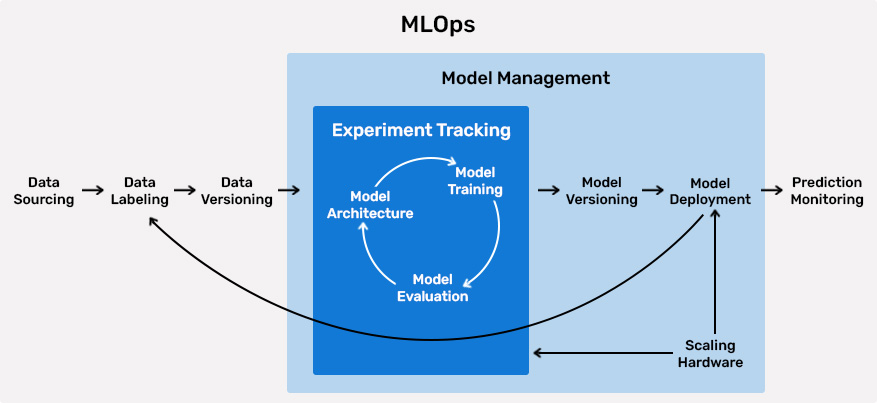

That brings us to MLOps, which sits at the intersection of DevOps and machine learning. MLOps is modeled after DevOps but adds data scientists and machine learning engineers to the team. Its aim is to automate as much as possible, from data sourcing to model monitoring and model retraining.
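As a rough illustration of that automation loop, the sketch below trains a deliberately trivial "model" (the mean of its training data), monitors its error on each new batch, and retrains when the error crosses a threshold. All names (`train`, `monitor`, `run_pipeline`) and the threshold value are assumptions made for this example, not part of any particular MLOps tool.

```python
from statistics import mean


def train(values):
    """'Train' a trivial model: always predict the mean of the data."""
    return mean(values)


def monitor(model, batch):
    """Mean absolute error of the constant prediction on a fresh batch."""
    return mean(abs(v - model) for v in batch)


def run_pipeline(batches, threshold):
    """Train on the first batch, then monitor every later batch and
    retrain on it whenever the monitored error exceeds the threshold."""
    model = train(batches[0])
    retrained_on = []
    for i, batch in enumerate(batches[1:], start=1):
        if monitor(model, batch) > threshold:
            model = train(batch)  # automated retraining step
            retrained_on.append(i)
    return model, retrained_on


batches = [[1.0, 2.0, 3.0], [2.0, 2.0, 2.0], [10.0, 11.0, 12.0]]
model, retrained_on = run_pipeline(batches, threshold=1.0)
print(model, retrained_on)
```

With these example batches the second batch matches the model closely, so nothing happens, while the third batch has drifted and triggers a retrain. A production pipeline would replace each function with real data sourcing, training, and monitoring components, but the control flow is the same idea.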

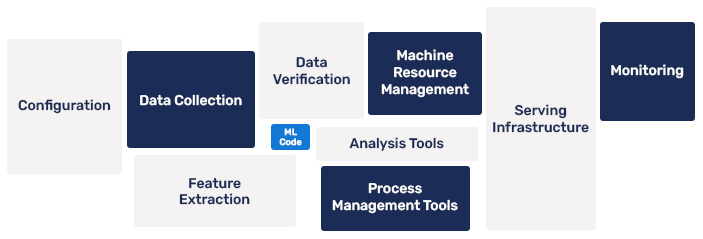

MLOps abstracts away the complex infrastructure (bare metal, virtualized cloud, container orchestration), the diverse hardware (CPU, GPU, TPU), and the diverse environment needs (libraries, frameworks). It also provides a set of interoperable tools that accelerate ML model development in all its phases.

A brief intro to the key phases of MLOps
