Data Lakehouse

Get the best of both worlds: Data Warehouse and Data Lake.

Unlock the next level of business decision-making.

Emergence data warehouse

For many years, businesses have utilized data analytics tools like data warehouses, data lakes, and data marts to help make informed decisions. These platforms collect and store all of a company’s data in one place, enabling thorough analysis. This allows, for example, a deeper insight into operational processes and therefore supports your business intelligence initiatives. Additionally, it reveals cost savings opportunities and previously undetected issues that could not be addressed through limited analysis. Incorporating machine learning processes can deepen the exploration of data structures and links, providing essential competitive advantage through gained intelligence.

Therefore, in the 1980s, the concept of the data warehouse was set up. In this process, the data is aggregated via ETL processes, consolidated, and reformatted into a scheme, which is suitable for the desired analytics. This process removes much of the information contained in raw data, preventing realization of future analytics that could use the data.

Very high costs due to cold data

A legacy data warehouse faces the challenge of linear correlation between the required amount of RAM and CPU and the amount of stored data. This characteristic of classic database systems results in high costs for infrequently used data, known as cold data. Organizations may resort to discarding large amounts of this data, despite its potential future value. Additionally, traditional database systems are designed to handle only one type of data structure, and any differently structured data must be stored in separate external systems.

The next evolutionary step: the introduction of classic data lakes

The outlined problems led to the development of data lakes in the late 2000s. The concept of a data lake is to split up the basic features of a database into several highly scalable systems. Data lakes have scalable file storage (e.g., Amazon S3) to persist data, at least one database to manage metadata, and at least one query engine as well as other components. This segregation allows the storage of structured, semi-structured, and unstructured data on a consolidated platform. Furthermore, the query engine requires only the computing power, which is needed to process the queried data. This fact results in cold data and raw data being able to be stored cost-effectively in a data lake. However, classic data lakes have limited data management functionality which is clearly sub-optimal when compared to classical databases or data warehouses. Therefore, data lakes are used as storage for raw data which is then transformed, enriched, and loaded to classic DWHs and data marts.

Disadvantages of a conventional data lake

Utilizing both a data lake and a data warehouse presents several challenges. The data analysis and data engineering teams must closely collaborate to determine the necessary data and its schema, leading to substantial communication overhead. Modifying the schema once it has been established in the data warehouse can also prove to be a complex process. Ultimately, the lack of a comprehensive view of raw data in the data lake can result in missed opportunities for identifying additional use-cases by the data analysis team.

Additionally, if not properly maintained, a data lake can quickly become ineffective and turn into a “data swamp” due to factors such as incorrect data structures, broken relations between data, a large volume of data, and difficulty in locating relevant information.

Furthermore, the absence of ACID transactions can result in poor data quality, rendering the data lake ineffective for your organization.

Other disadvantages are, maintenance effort on multiple systems, analytics on outdated data due to lengthy ETL processes (82% of all analysts use outdated data), or a limited support of machine learning analytics, because they can only be run against raw data in the data lake.

Due to these issues, a new architecture was introduced in 2020, which solved the problems mentioned above—the Lakehouse.

The Lakehouse architecture

The Lakehouse combines a data lake’s economy with a data warehouse’s performance and management features.

As previously noted, a data lake serves as the storage for raw data. However, it is now enhanced with a data management and governance layer called the delta lake.

This layer employs a Spark library developed by Databricks that adds database management system (DBMS) capabilities to the data lake, like those found in a data warehouse (DWH). The features include, among others, ACID transactions, version control, enforcement of data structures, metadata management, and rollback functionality.

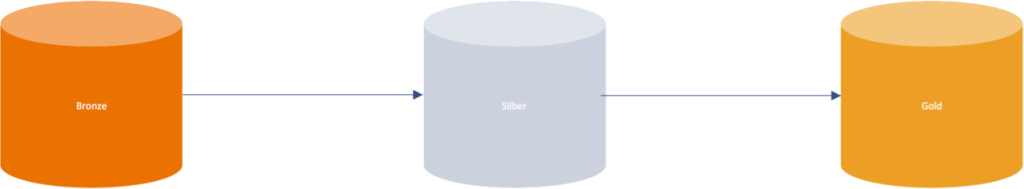

In addition to these features, the raw data must be preprocessed for advanced analytics. The delta lake adopts a multi-layer approach that structures raw data through incremental transformations using tables, referred to as delta tables.

Raw and historical data

The incoming data from stream or batch processing are initially captured in raw format (bronze table).

Filtered, cleaned and enriched data

In the next step, the bronze table is filtered, cleaned up, and purged. The raw data, which has been normalized in this way, are stored in the silver table, and can be queried from there.

Data prepared for analysis

In a last step, silver tables can be aggregated. These business level aggregations are stored in the gold table. They form the foundation for analytics and reports and allow for efficient data queries.

Delta tables and MPP query engines

The information in delta tables, along with their details, is stored in the object storage as immutable parquet files. A separate transaction log is created for each delta table, which records all transactions in immutable JSON files. The log also includes other information, like the time and the person responsible for the transaction, for audit purposes. If there are any changes or deletions made to the data, the parquet files are not erased, but only marked as deleted. This makes it possible to undo changes easily.

The update of these delta tables can be triggered either by the presence of new data through real-time processing or by a predetermined schedule, such as daily. The transformations are automatically reapplied in the event of changes, ensuring that the data in the gold table is always current and of high quality. The update pipeline for the table can remain operational at all times, which is not achievable with a traditional data warehouse (DWH).

Another aspect of the Lakehouse architecture is the use of innovative massively parallel processing (MPP) query engines, such as Trino. These external and distributed query engines process data in memory, enabling lightning-fast queries. With metadata storage and optimized data structures, the query performance of a DWH can be achieved.

These MPP query engines can be directly integrated with the data lake, allowing the benefits of both data lakes and data warehouses to be combined within the Lakehouse framework.

Contact us!

We’re here for you

"*" indicates required fields