When do you need AI agents, and when is a copilot enough?

A practical decision framework for organizations

“Copilots increase efficiency. AI agents change responsibility – because they don’t just respond, they act.”

Anastasios Ntaflos

Business Area Lead Modern Work | Microsoft MVP | evoila

Generative AI has firmly arrived in everyday work. Many employees already use it as a matter of course — to draft emails, summarize meetings, or structure presentations. With Microsoft 365 Copilot in particular, knowledge and text-based work has become significantly more efficient.

But in conversations with customers, I hear the same question more and more often:

Is a copilot enough — or do we need AI agents?

It’s a valid question. While copilots deliver impressive productivity gains today, they reach their limits in more complex scenarios. At the same time, expectations around AI agents are rising rapidly — often without a clear decision framework.

This article aims to change that. It provides a practical way to distinguish copilot use cases from agent-based scenarios and to make informed decisions instead of following the hype.

Copilot vs. AI Agent: A fundamental difference

At its core, the difference is simple:

A copilot reacts. An AI agent acts.

A copilot responds to individual prompts: “Summarize this document”, “Draft a reply to this email”, “Create an agenda from my notes”. That is incredibly valuable — but always point-in-time.

An AI agent, by contrast, behaves more like a digital employee. It understands a goal, plans the necessary steps, interacts with multiple systems, evaluates intermediate results, and adapts its approach along the way. Ideally, it also learns continuously from feedback.

In practice, this means:

- Copilots support people in completing tasks.

- AI agents take on partial responsibility for how work gets done.

Whether that shift makes sense has little to do with enthusiasm for technology — and everything to do with three very concrete criteria.

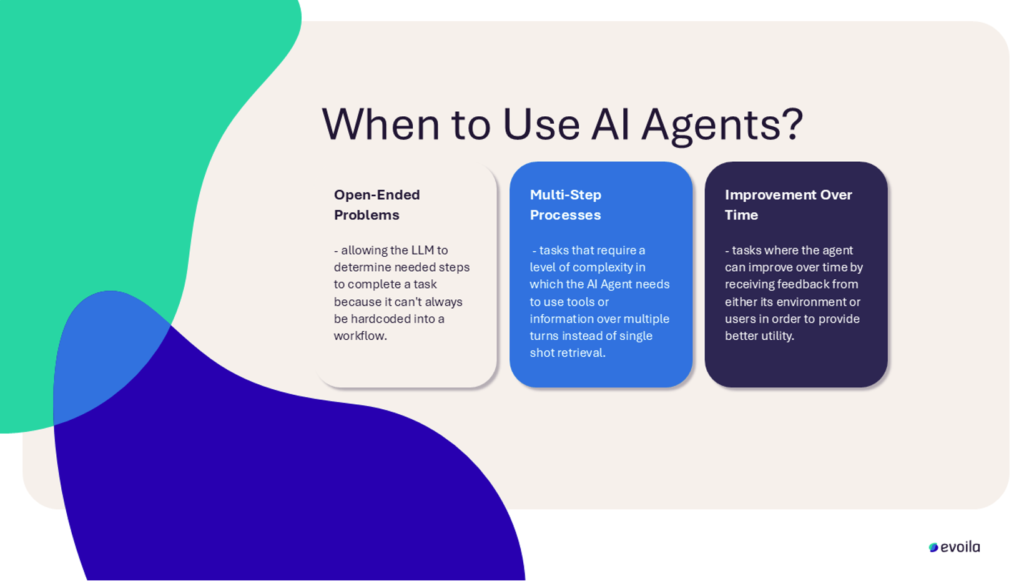

Open-ended problems: When the path to the goal isn’t clear

Many business processes are well defined: fill out a form, request approval, save a record — done. These workflows are perfectly suited for classic automation, low-code platforms, or RPA.

Challenges arise when the path to the outcome cannot be fully predicted in advance. This is where we encounter open-ended problems.

An open-ended problem exists when:

- the required steps cannot be fully predefined,

- decisions depend heavily on context,

- and human experience has traditionally played a central role.

Example: IT Support

An IT ticket is submitted in free text:

“My application hasn’t been working properly since this morning.”

A copilot can help by surfacing relevant documentation or FAQs. But the real work starts after that:

- Which system is affected?

- Are there logs or known incidents?

- Are other users impacted as well?

- Is this a configuration issue, an update, or an external outage?

An AI agent can analyze the situation, combine information from multiple systems, form hypotheses, and plan the next steps — instead of merely delivering information.

Example: Project Assistance

In customer projects, priorities change constantly. A project assistant may need to remind stakeholders, escalate risks, coordinate meetings, or initiate change requests. The next best action depends on project status, risk level, and stakeholder context — not on a fixed flowchart.

Rule of thumb:

As soon as a process feels more like a consultation or clarification dialogue than like filling out a form, an AI agent is often superior to rigid automation.

Multi-step processes: When a single answer isn’t enough

The second dimension is process complexity. Many valuable AI use cases don’t consist of a single interaction, but of several logically connected steps.

Multi-step processes typically involve:

- multiple actions in sequence,

- different systems,

- and intermediate results that influence what happens next.

Example: B2B Quote Creation

A realistic process often looks like this:

- Retrieve customer data and history from the CRM

- Extract requirements from emails or meeting notes

- Incorporate product and pricing data from the ERP

- Generate a customized proposal document

- Obtain internal approvals

- Store the document in a compliant manner and record its delivery

Today, this is often fragmented: a bit of copilot support here, manual steps there, lots of coordination via email.

An AI agent can orchestrate this end-to-end. It understands the objective, executes the steps, asks clarifying questions when needed, and ensures a consistent outcome.
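To make the orchestration idea more tangible, here is a minimal sketch in Python. It is not a real agent: the steps are fixed rather than planned dynamically, and the CRM and ERP calls are replaced by hypothetical stand-ins with made-up prices and thresholds. What it does show is the pattern described above: steps share one context, an intermediate result (the total) decides whether approval is needed, and a step can pause the run to ask a clarifying question.

```python
from typing import Callable, Dict, List


class NeedsClarification(Exception):
    """Raised by a step when it cannot continue without human input."""


def load_customer(ctx: Dict) -> None:
    # Stand-in for a CRM lookup (hypothetical data).
    ctx["customer"] = {"id": ctx["customer_id"], "tier": "gold"}


def extract_requirements(ctx: Dict) -> None:
    # Stand-in for extracting requirements from emails or meeting notes.
    notes = ctx.get("notes", "")
    items = [line.strip() for line in notes.splitlines() if line.strip()]
    if not items:
        raise NeedsClarification("No requirements found. Which products are requested?")
    ctx["requirements"] = items


def price_items(ctx: Dict) -> None:
    # Stand-in for ERP pricing: flat 1000 per item, 10% off for gold customers.
    discount_pct = 10 if ctx["customer"]["tier"] == "gold" else 0
    ctx["total"] = len(ctx["requirements"]) * 1000 * (100 - discount_pct) // 100


def check_approval(ctx: Dict) -> None:
    # The intermediate result (total) decides whether approval is needed.
    ctx["approval_required"] = ctx["total"] > 1500


STEPS: List[Callable[[Dict], None]] = [
    load_customer,
    extract_requirements,
    price_items,
    check_approval,
]


def run_quote(ctx: Dict) -> Dict:
    """Run all steps in order; stop and ask if a step needs clarification."""
    for step in STEPS:
        try:
            step(ctx)
        except NeedsClarification as question:
            ctx["status"] = "waiting_for_input"
            ctx["question"] = str(question)
            return ctx
    ctx["status"] = "done"
    return ctx


if __name__ == "__main__":
    print(run_quote({"customer_id": "ACME", "notes": "10 licenses\nonboarding workshop"}))
    print(run_quote({"customer_id": "ACME"}))
```

In a real deployment, the step list itself would typically be chosen by the agent based on the goal, and each stand-in would call the actual system of record.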

Example: Employee Onboarding

From account creation and permissions to hardware provisioning and coordination with departments — onboarding is a classic multi-step process with many dependencies. An agent can automatically derive the necessary actions from HR data and role profiles, including reminders when information is missing.

The key takeaway:

Traditional automation handles individual steps well. AI agents become valuable when a system is needed that understands and manages the entire process.

Improvement over time: When the agent learns

The third — and often underestimated — dimension is the ability to improve over time. A static bot is only as good as its initial configuration. An AI agent, however, can learn from feedback.

This learning happens on multiple levels:

- Domain knowledge: User corrections refine classification and decision logic.

- Process optimization: Repeated manual interventions highlight improvement potential.

- User preferences: Team- or role-specific patterns influence future decisions.

Example: Ticket Triage

An agent initially classifies support tickets based on rules. Service desk staff correct misclassifications, and this feedback steadily improves accuracy. In such a setup, error rates can drop noticeably within a few weeks.
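The feedback loop in this example can be sketched in a few lines of Python. This is a deliberately simple illustration, not how a production agent learns: the keyword rules, category names, and the word-counting scheme are all assumptions made for the sketch. The point is the mechanism: the agent starts with static rules, and every correction from service desk staff is stored and takes precedence the next time similar wording appears.

```python
from collections import Counter, defaultdict

# Initial static configuration (hypothetical keywords and categories).
RULES = {"password": "access", "vpn": "network", "printer": "hardware"}


class TriageAgent:
    def __init__(self) -> None:
        # word -> how often staff confirmed each category for tickets containing it
        self.corrections = defaultdict(Counter)

    def classify(self, text: str) -> str:
        words = text.lower().split()
        # 1) Prefer what staff corrections have taught us.
        votes = Counter()
        for word in words:
            votes.update(self.corrections.get(word, {}))
        if votes:
            return votes.most_common(1)[0][0]
        # 2) Fall back to the initial rules.
        for word in words:
            if word in RULES:
                return RULES[word]
        return "general"

    def record_correction(self, text: str, correct_category: str) -> None:
        # Each distinct word in the ticket casts one vote for the corrected category.
        for word in set(text.lower().split()):
            self.corrections[word][correct_category] += 1


if __name__ == "__main__":
    agent = TriageAgent()
    ticket = "vpn login fails"
    print(agent.classify(ticket))             # rule-based answer
    agent.record_correction(ticket, "access")
    print(agent.classify(ticket))             # answer after staff feedback
```

A real system would replace the word counts with a proper model and filter out common words, but the division of labor stays the same: rules provide the starting point, corrections provide the improvement.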

Example: Sales

A sales agent prioritizes leads. Feedback on actual deal outcomes helps it learn which signals truly matter. The result: focus shifts to leads with real potential.

For organizations, this means investing not in one-off automation, but in systems that evolve alongside the business.

A simple decision framework

From these three dimensions, a pragmatic decision framework emerges:

- Is the problem open and not fully predefined?

- Does the use case span multiple steps, systems, or roles?

- Are there feedback loops from which the agent can learn?

The more often the answer is “yes,” the more sense it makes to use an AI agent — and the less sufficient a pure copilot approach becomes.
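For teams that want to screen a backlog of candidate use cases, the three questions can be written down as a small checklist in code. The sketch below is one possible reading of the framework: the field names, the thresholds, and the wording of the recommendations are illustrative assumptions, not fixed rules.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    open_ended: bool      # path to the goal cannot be fully predefined
    multi_step: bool      # spans multiple steps, systems, or roles
    feedback_loops: bool  # corrections or outcomes are available to learn from


def recommend(use_case: UseCase) -> str:
    """Map the number of 'yes' answers to a rough recommendation.

    The thresholds are assumptions for illustration only.
    """
    yes_count = sum([use_case.open_ended, use_case.multi_step, use_case.feedback_loops])
    if yes_count == 0:
        return "workflow automation plus copilot"
    if yes_count == 1:
        return "copilot, re-evaluate later"
    return "AI agent candidate"


if __name__ == "__main__":
    candidates = [
        UseCase("ticket triage", open_ended=True, multi_step=True, feedback_loops=True),
        UseCase("leave request form", open_ended=False, multi_step=False, feedback_loops=False),
    ]
    for candidate in candidates:
        print(f"{candidate.name}: {recommend(candidate)}")
```

The output is a starting point for discussion, not a verdict. Governance, risk, and data quality still need to be assessed separately for each use case.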

Conclusion: From hype to clarity

AI agents are not the answer to every automation challenge. Where processes are clear, stable, and tightly regulated, classic workflow automation combined with copilot support is often the best solution.

But wherever tasks are open-ended, span multiple steps, and benefit from experience, AI agents can become true sparring partners in everyday work.

At evoila, we repeatedly see that organizations which consciously make this distinction move faster from experimentation to measurable business results. If this question is currently relevant for your organization, we invite you to get in touch — often a short conversation is enough to create clarity.

Final thought:

Which of your processes still feel “humanly complex” today? That is often where the greatest leverage for AI agents lies — not as a replacement, but as an intelligent augmentation of your teams.
